The Orchestra Analogy: Learning Without Starting from Silence

Imagine an orchestra preparing for a grand performance. Each musician has mastered a specific piece, honed by countless rehearsals. Now, a new symphony arrives—different, yet composed in the same genre. Rather than learning every note from scratch, the musicians adapt their prior knowledge to this new melody, fine-tuning tempo and harmony to fit the new rhythm. This is how transfer learning operates in the world of machine learning—using the symphony of prior experience to master new, related compositions.

The technique saves not only time but also considerable computational effort, just as seasoned musicians don’t relearn their instruments for every new score. Data scientists and engineers, including graduates of an AI course in Kolkata, likewise rely on pre-trained models to jump-start work in domains such as healthcare, natural language processing, and robotics.

From Blank Canvas to Brushstroke: The Power of Pre-Trained Models

Training a model from scratch is like painting on a blank canvas: each colour is mixed anew and each brushstroke is uncertain. Transfer learning, by contrast, offers an almost-complete painting that needs only touch-ups to bring it to life. Vision models such as VGGNet and ResNet, trained on the more than one million labelled images of ImageNet, and language models such as BERT, trained on billions of words of text, have already learned robust, general-purpose representations. Comparable pre-trained models exist for audio.

By building on these pre-trained foundations, engineers only need to adapt the model to a narrower task, such as recognising skin lesions instead of generic objects. The general features are already learned, so only a small part of the model (often just a new output layer) must be trained. As a result, adaptation is typically much faster than training from scratch and needs far fewer labelled examples and less compute. The payoff is greater efficiency, a smaller environmental footprint, and better scalability. It is this balance of precision and pragmatism that makes transfer learning the artist’s shortcut in artificial intelligence.
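The simplest form of this adaptation is often called feature extraction or linear probing. The Python below is a minimal sketch of it: it keeps a "pre-trained" feature extractor frozen and trains only a new classification head on a small target dataset. The backbone is a hypothetical stand-in with fixed, hand-picked weights, and the 2-D data is synthetic. A real pipeline would instead load published weights for a model such as ResNet.

```python
import math
import random

# Hypothetical stand-in for a pre-trained backbone. In practice this would be
# a network such as ResNet mapping an image to a feature vector; here it maps
# a 2-D point to 3 features using fixed weights that are never updated.
FROZEN_W = [[1.0, 0.0], [0.0, 1.0], [0.7, -0.7]]


def backbone(x):
    """Frozen feature extractor: fixed linear map followed by tanh."""
    return [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_W]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on frozen features."""
    feats = [(backbone(x), y) for x, y in data]  # computed once; backbone untouched
    w, b = [0.0] * len(FROZEN_W), 0.0
    for _ in range(epochs):
        for f, y in feats:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log-loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b


def predict(w, b, x):
    logit = sum(wi * fi for wi, fi in zip(w, backbone(x))) + b
    return int(sigmoid(logit) > 0.5)


# Small synthetic "target task": label points by the sign of x0 + x1.
random.seed(0)
target = []
for _ in range(40):
    x = [random.uniform(-1, 1), random.uniform(-1, 1)]
    target.append((x, int(x[0] + x[1] > 0)))

w, b = train_head(target)
accuracy = sum(predict(w, b, x) == y for x, y in target) / len(target)
print(f"training accuracy of the new head: {accuracy:.2f}")
```

Only the three head weights and the bias are learned. The backbone's weights are identical before and after training, which is what keeps this kind of adaptation cheap.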

The Bridge Between Worlds: Understanding Domain Adaptation

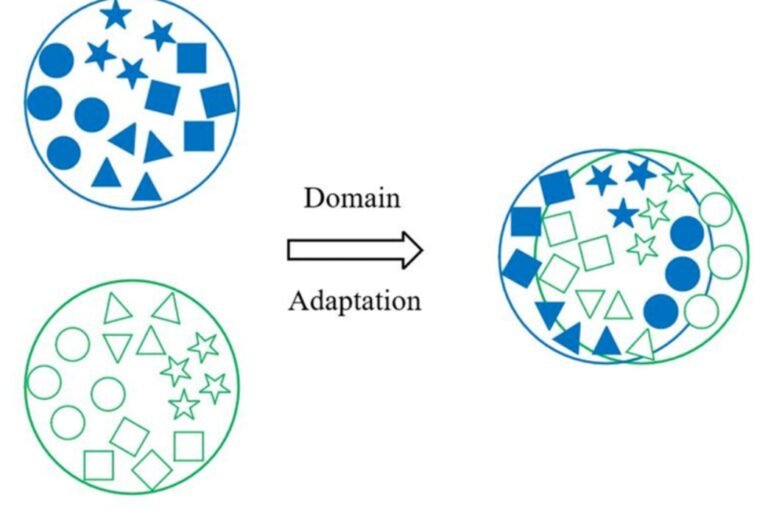

Now picture a mountaineer crossing from one terrain to another—say, from rocky hills to icy slopes. The skills remain the same, but the grip, balance, and rhythm must shift. Domain adaptation functions much like this transition. It allows a model trained in one environment (the source domain) to perform effectively in another (the target domain) where data characteristics differ.

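For example, a sentiment analysis model trained on movie reviews might falter when analysing product reviews, even though both involve text. Words that signal sentiment in one domain ("gripping", "overacted") rarely appear in the other ("durable", "leaky"). Domain adaptation techniques help align the two domains. Instance re-weighting gives more weight to source examples that resemble the target data. Adversarial training learns features that a separate discriminator cannot use to tell the domains apart. Both make the model flexible enough to navigate the new linguistic landscape.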
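Instance re-weighting can be sketched in a few lines of Python. Purely for illustration, the sketch assumes that each review is summarised by a single numeric feature that is roughly Gaussian in each domain. Under that assumption, the density ratio p_target(x) / p_source(x) can be estimated from the two fitted Gaussians. Real systems usually estimate this ratio differently, for example with a classifier trained to tell source examples from target examples. The function names and data are hypothetical.

```python
import math
import random
import statistics


def gaussian_pdf(x, mu, sigma):
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def importance_weights(source, target):
    """Estimate w(x) = p_target(x) / p_source(x) for each source sample,
    assuming (for illustration only) a roughly Gaussian 1-D feature per domain."""
    mu_s, sd_s = statistics.mean(source), statistics.stdev(source)
    mu_t, sd_t = statistics.mean(target), statistics.stdev(target)
    return [gaussian_pdf(x, mu_t, sd_t) / gaussian_pdf(x, mu_s, sd_s) for x in source]


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


random.seed(1)
# Hypothetical scalar feature per review: the target domain is shifted.
source = [random.gauss(0.0, 1.0) for _ in range(5000)]  # e.g. movie reviews
target = [random.gauss(1.0, 1.0) for _ in range(5000)]  # e.g. product reviews
weights = importance_weights(source, target)

# Re-weighted source statistics approximate the target domain's statistics.
plain = statistics.mean(source)
reweighted = weighted_mean(source, weights)
print(f"unweighted source mean: {plain:.3f}, re-weighted: {reweighted:.3f}")
```

During training, each source example's loss would be multiplied by its weight. The model then effectively optimises for the target distribution while still learning from the labelled source data.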

In research labs and applied AI settings, domain adaptation bridges the gap between academic models and real-world deployment, where data is rarely neat, labelled, or uniform. This adaptability transforms machine learning systems into true explorers of uncharted territories.

Stories of Speed: How Transfer Learning Accelerates Innovation

When Google released pre-trained Inception networks, engineers could retrain only the final layers to recognise new image categories, often in hours rather than the days or weeks that full training would take. In medicine, researchers initialised networks with weights learned on ImageNet to detect diabetic retinopathy in retinal photographs. This gave the models a head start from general visual features and can reduce the labelled data needed compared with starting from random weights. In natural language processing, models such as BERT and GPT transformed the field. They were pre-trained on large generic corpora, and that knowledge was then transferred to specific contexts such as legal documents or financial reports.

Each of these milestones shares a common principle: reuse accelerates discovery. Instead of reinventing the wheel, scientists refine it for different roads. The method echoes human learning: we build on what we know, adapting lessons from one experience to another. This approach is now part of the curriculum in many advanced programmes, including an AI course in Kolkata. It helps learners understand not only how models learn but also how they transfer what they have learned across tasks.

The Subtle Craft of Fine-Tuning: Balancing Old and New Knowledge

Fine-tuning, the final act of transfer learning, is akin to tuning an instrument for a new concert hall. The goal is not to erase the old harmony but to adjust it to resonate with a new environment. During fine-tuning, the pre-trained model is trained further on the new dataset, usually with a small learning rate. Often the early layers, which capture general features such as edges or common word patterns, are frozen. Only the later, more task-specific layers are updated, so the model retains its general knowledge while adapting to task-specific nuances.
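This layer-freezing strategy can be sketched in plain Python as a per-layer learning-rate table plus an SGD step that skips frozen layers. This is an illustrative sketch: the layer names, toy weights, and pretend gradients are hypothetical. Frameworks such as PyTorch express the same idea by setting `requires_grad = False` on frozen parameters and passing per-group learning rates to the optimizer.

```python
def make_config(layer_names, n_frozen, backbone_lr, head_lr):
    """Map each layer name to a learning rate, or None if the layer is frozen.

    The first n_frozen layers are frozen, the remaining pre-trained layers get
    a small learning rate, and the last layer (assumed to be the new head)
    gets a larger one.
    """
    config = {}
    last = len(layer_names) - 1
    for i, name in enumerate(layer_names):
        if i < n_frozen:
            config[name] = None
        elif i == last:
            config[name] = head_lr
        else:
            config[name] = backbone_lr
    return config


def sgd_step(layers, grads, config):
    """One plain SGD update that skips frozen layers and uses per-layer rates."""
    updated = {}
    for name, weights in layers.items():
        lr = config[name]
        if lr is None:  # frozen: keep the pre-trained values unchanged
            updated[name] = list(weights)
        else:
            updated[name] = [w - lr * g for w, g in zip(weights, grads[name])]
    return updated


# Hypothetical layer names and toy weights; a real model would hold tensors.
layers = {
    "block1": [0.5, -0.2],
    "block2": [0.1, 0.3],
    "block3": [0.8, -0.4],
    "head": [0.0, 0.0],
}
grads = {name: [1.0, -1.0] for name in layers}  # pretend gradients
config = make_config(list(layers), n_frozen=2, backbone_lr=1e-4, head_lr=1e-2)
new_layers = sgd_step(layers, grads, config)
print(config)
print(new_layers)
```

The unfrozen pre-trained layers get a much smaller learning rate than the new head. This is one common way to adapt them gently without discarding what they already encode.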

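However, fine-tuning demands precision. Training too aggressively, with too high a learning rate or for too many epochs, can overwrite the general knowledge stored in the pre-trained weights, a phenomenon known as catastrophic forgetting. On a small target dataset, aggressive training also invites overfitting. Undertraining, on the other hand, may leave the model too rigid to perform well on the new domain. Striking the right balance means choosing which layers to unfreeze, how large a learning rate to use, and when to stop, usually judged on held-out target data. That requires both computational insight and human intuition, turning the process into an art as much as a science.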
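One common way to guard against catastrophic forgetting is a penalty that pulls the weights back toward their pre-trained values, often called L2-SP. It is shown here as an illustration, not as something this article prescribes. The total loss becomes task loss + (alpha / 2) * ||w - w0||^2. The toy Python below fine-tunes a single weight with gradient descent; the task loss and all numbers are made up for illustration.

```python
def fine_tune(w0, target_opt, alpha, lr=0.1, steps=500):
    """Gradient descent on a one-weight toy problem with an L2-SP-style penalty.

    Loss = 0.5 * (w - target_opt)**2   (stand-in for the new task's loss)
         + 0.5 * alpha * (w - w0)**2   (pull back toward the pre-trained w0)

    The minimum is at (target_opt + alpha * w0) / (1 + alpha); this loop
    converges to it as long as lr < 2 / (1 + alpha).
    """
    w = w0  # fine-tuning starts from the pre-trained weight
    for _ in range(steps):
        grad_task = w - target_opt
        grad_anchor = alpha * (w - w0)
        w -= lr * (grad_task + grad_anchor)
    return w


w_pretrained, w_task_best = 2.0, -1.0
for alpha in (0.0, 1.0, 4.0):
    print(alpha, round(fine_tune(w_pretrained, w_task_best, alpha), 4))
```

With alpha = 0, the weight drifts all the way to the new task's optimum and loses its pre-trained value. As alpha grows, the weight stays closer to w0 and the model becomes more rigid. The penalty strength is therefore one concrete knob for the balance between overtraining and undertraining.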

Looking Ahead: The Future of Reusable Intelligence

Transfer learning and domain adaptation represent the next chapter in AI’s evolution, a move from isolated learning to cumulative intelligence. Future models are likely not only to learn faster but also to share knowledge more seamlessly across languages, modalities, and industries. Imagine an AI system that learns patterns from healthcare, applies them to agriculture, and then refines them for climate modelling.

This interconnected ecosystem could make artificial intelligence more sustainable, equitable, and accessible. By leveraging pre-trained models, we move closer to a world where machines learn as humans do: by remembering, adapting, and transferring wisdom.

Conclusion: From Knowledge to Insight

The true genius of transfer learning lies in its mimicry of human cognition. We rarely start from zero. Whether learning to play a new instrument or mastering a new language, our previous experiences shape how we learn. AI is now learning the same rhythm, drawing on past training to accelerate future performance.

For students and professionals venturing into artificial intelligence, understanding transfer learning and domain adaptation is not just technical literacy—it is creative empowerment. As many discover through an AI course in Kolkata, these methods redefine what it means to learn, showing that intelligence, human or artificial, thrives not in isolation but in connection.